Can You Trust Movie Critics?

Ever since The Last Jedi was released, Rotten Tomatoes Audience Scores, Cinemascores and whatever professional critics had to say have been hotly debated. There has been a distinct feeling among quite a few moviegoers that whatever the Tomatometer or Metascore says is far removed from what the fans think about a movie. Cinemascore is usually cited as a highly scientific and accurate gauge. But a gauge for what? Audience satisfaction? In this article I will try to examine whether the aggregate scores by professional movie reviewers on Rotten Tomatoes and Metacritic are actually far removed from what the fans feel.

If you are really, really lazy and don’t want to see several nice plots you can scroll ahead, there will be a bottom line further below. But if you stay and actually look at some results and plots, you will find out some interesting things!

First of all, a quick reminder of what the previous article that investigated the influence of user scores on actual box office results found.

Any score has only a moderate correlation with final box office. This means that if your movie has a Cinemascore of “A” or a Rotten Tomatoes Audience Score of 85, it could be a huge success or a grand flop. Cinemascore is no better than any of the user scores or aggregate critic scores at predicting the actual final box office. I also tested the Tomatometer, Metascore and Metacritic User Score in the meantime, and all of these scores only have a moderate correlation of about 0.5, which means there is a trend, but it’s not very accurate.
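To make “moderate correlation” a bit more concrete, here is a minimal Python sketch of how a Pearson correlation can be computed by hand. The numbers are made up for illustration and are not taken from my database, and the names `pearson_r`, `audience_scores` and `box_office_musd` are purely hypothetical. The squared value is a handy rule of thumb: a correlation of 0.5 means a straight-line fit accounts for only about a quarter (0.5 × 0.5 = 0.25) of the variation in box office.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations and of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Made-up illustrative numbers, NOT taken from the article's database.
audience_scores = [85, 47, 90, 62, 71, 55, 88, 40]
box_office_musd = [120, 310, 400, 95, 60, 180, 75, 30]

r = pearson_r(audience_scores, box_office_musd)
# r squared is the share of variance a straight-line fit would account for.
print(f"r = {r:.2f}, r squared = {r * r:.2f}")
```

Swapping in real score and box office columns would give the kind of figure quoted above, assuming the same plain Pearson correlation is used.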

It was also found that Cinemascore and the Rotten Tomatoes Audience score have a strong correlation. Why is that important? It means the RT Audience Score is not bogus, it’s legit, always provided Cinemascore is based on good science.

Before I further investigate the Tomatometer and Metascore I will give you one final fact about the various user scores, professional scores and Cinemascore. While none of them can really predict the final box office of your movie with any certainty, Cinemascore stands out from all the others.

Cinemascore is the only score that can give good predictions about your chances at the box office. In other words: the lower your Cinemascore, the less and less likely it is that the movie will have a high box office. Cinemascore stands out from all the other scores, since even a movie with a user score of 47 or 53 can still be a huge financial success. No movie with a “C” or “D” Cinemascore will ever do the same. Cinemascore is a very useful predictor for your chance at winning at the box office!

Here’s the table with all the probabilities. I increased the sample size by also including movies from 2015-2018, and the overall sample is now almost 400 movies.

As you can see, Cinemascore has a curious characteristic. An “A+” movie has worse chances of becoming a huge box office success than an “A” movie. This is because some niche movies have “A+” scores, various Tyler Perry comedies, for example. Most huge blockbusters don’t get “A+” scores. There are two exceptions: “The Avengers” and “Black Panther”.

Only very few “A-” movies make more than 300 million at the box office. Actually, “A-” movies have a good chance of tanking at the box office. And if your movie is a “B+” you already know your big blockbuster is in trouble. When Justice League got a “B+”, Warner Brothers knew on Friday night their movie would most likely not make much more than 200 million. Anything below a “B+” is a disaster for any blockbuster. “B” movies mostly make no more than 100 million.
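If you want to build a probability table like this yourself, the idea is simply to group movies by grade and count how many cross a box office threshold. The following Python sketch shows one way to do it, under the assumption that you have (grade, domestic gross) pairs. The `movies` list is invented for illustration and the function name `share_above` is hypothetical.

```python
from collections import defaultdict

def share_above(movies, threshold_musd):
    """Per grade, the fraction of movies whose gross exceeds the threshold."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for grade, gross in movies:
        totals[grade] += 1
        if gross > threshold_musd:
            hits[grade] += 1
    return {grade: hits[grade] / totals[grade] for grade in totals}

# Hypothetical (grade, domestic gross in million USD) pairs, for illustration only.
movies = [
    ("A+", 65), ("A+", 620),
    ("A", 530), ("A", 340), ("A", 150),
    ("A-", 110), ("A-", 320), ("A-", 80),
    ("B+", 210), ("B+", 90),
    ("B", 45),
]

result = share_above(movies, 300)
for grade, share in result.items():
    print(f"{grade}: {share:.0%} above 300 million")
```

With a real list of almost 400 movies the same grouping gives one probability per grade and threshold, which is all the table is.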

You can also see why a Cinemascore is not so much about audience popularity or opinion, it’s a gauge for potential box office success.

And this is the bottom line: Cinemascore is a useful gauge to predict whether or not a movie has a chance at making big money at the box office. It’s not so much about perceived quality. This is why the Transformers movies or Batman Forever all have high Cinemascores. They had the draw and the power to lure people to theaters. But the Cinemascore does not say much about whether the people who went to see the movie actually liked it.

Therefore a Cinemascore of “A” for The Last Jedi made a prediction about box office success (and it won the jackpot) but is not an actual gauge for audience satisfaction.

With that being said we can move on and investigate if professional critics are actually removed from reality. What is your feeling? Do you think professional critics, on aggregate, are all bought by Disney, Warner, or Rupert Murdoch and write shill reviews in order to push agendas or box office? Let’s examine the scores and find out what is going on!

First of all: since last time I have added a lot of movies to the database. I now have almost 400 movies in my list. I included movies from 2015 to 2018 to see if review bombing or shill reviews are actually happening and if they are widespread. I made sure to include movies with diverse actors to see if something weird happens with them on Rotten Tomatoes and Co.

It will get somewhat technical, but don’t worry. You mostly get to see nice graphics, and these are actually very easy to interpret. I will tell you how to read the plots.

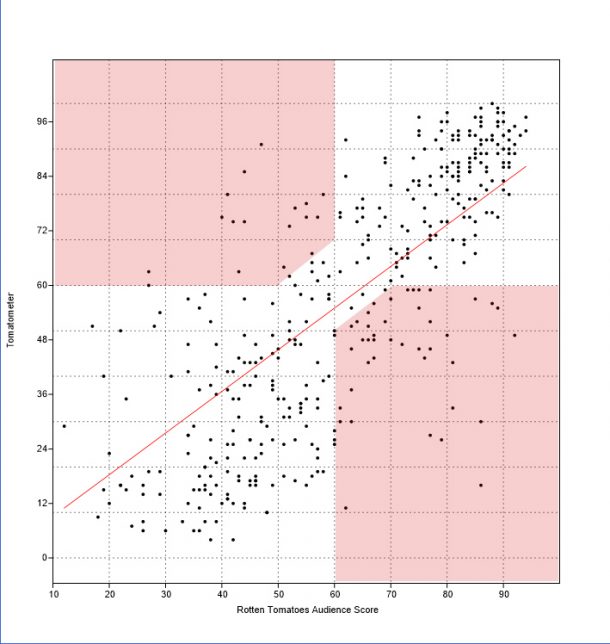

So, first up is Rotten Tomatoes. In the following plot you will see the correlation between Rotten Tomatoes Audience Scores and the Tomatometer:

What do you see here? Each of the black dots is a movie. In an ideal world the black dots should be close to the red line. As you can see the overall trend is very similar for the Tomatometer and the Audience score. This means a movie with a high Tomatometer usually also has a high audience score and vice versa.

The red sections on the graph are the problem zones. Any movie in this area had its score changed from “fresh” to “rotten” or vice versa. In the upper left corner are the movies with a high Tomatometer that have a low audience score. In the lower right corner are the movies that have a low Tomatometer but a high audience score.

You can see that most movies are ok, only some show anomalies.

So what is the takeaway here?

- the Tomatometer does reflect what the audience on Rotten Tomatoes feels

- there are some outliers, but not many.

- 12.98% of all the movies have a fresh audience score but a rotten Tomatometer, meaning the audience loves the movies more than the critics.

- 4.58% of all the movies have a fresh Tomatometer but a rotten audience score, which means the audience didn’t like the movie, but the critics did. Among these few movies are “The Last Jedi”, “Hail Caesar!” and “Haywire”, but not many more.

- When critics love a movie, they REALLY love it, there are movies with a 100% Tomatometer. When they hate something, they REALLY hate it, there are some movies with single digit Tomatometers. The fans are less extreme, but overall opinion still aligns.
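The two percentages above come from counting the movies that land in the red corners of the plot. Here is a minimal Python sketch of that counting. Rotten Tomatoes calls a Tomatometer of 60% or more “fresh”, and I assume here that the same cutoff is applied to the audience score. The score pairs are invented for illustration and `disagreement_shares` is a hypothetical helper name.

```python
FRESH_THRESHOLD = 60  # assumption: 60 or more counts as "fresh" for both scores

def disagreement_shares(pairs, threshold=FRESH_THRESHOLD):
    """Fraction of movies where critics and audience land on opposite sides.

    pairs: iterable of (critic_score, audience_score) on a 0-100 scale.
    """
    pairs = list(pairs)
    n = len(pairs)
    # Audience says fresh, critics say rotten.
    audience_only = sum(1 for c, a in pairs if c < threshold <= a)
    # Critics say fresh, audience says rotten.
    critics_only = sum(1 for c, a in pairs if a < threshold <= c)
    return {
        "audience_fresh_critics_rotten": audience_only / n,
        "critics_fresh_audience_rotten": critics_only / n,
    }

# Made-up (Tomatometer, Audience Score) pairs, for illustration only.
sample = [(90, 45), (27, 73), (85, 88), (15, 30), (55, 62),
          (93, 90), (70, 65), (40, 45), (98, 95), (35, 80)]

shares = disagreement_shares(sample)
for label, share in shares.items():
    print(f"{label}: {share:.1%}")
```

Everything that falls in neither bucket is a movie where critics and audience agree on fresh or rotten, which is the large majority in my sample.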

The main takeaway is that review bombing a movie with a high Tomatometer is not really a thing. Some movies with a low Tomatometer have a much higher audience score. When you look at the movies that are affected you will see some interesting patterns:

- “guilty pleasures” such as the Fifty Shades movies, the Transformers franchise or the Pirates of the Caribbean movies are among the movies with high(er) audience scores and low Tomatometer. Even a fan of these movies would likely admit that they are not “objectively” great movies.

- Movies that are based around faith or religion have a low Tomatometer but a much, much higher audience score.

- DCEU movies. They are disliked by critics. And this is one of the few instances on RT where you can say that the fans might try to counteract a low score on principle.

What about review bombing of movies with a diverse cast or with a primarily female cast? It does not exist on Rotten Tomatoes. So any racists, bigots or misogynists are either a tiny minority on RT or use other platforms.

The sole and sad exception is “The Last Jedi”. But since no other movie shows this effect I doubt it’s misogynists or racists downvoting the movie. Or else they are highly selective, because movies such as Get Out, Hidden Figures, Moonlight, Girls Trip, Black Panther etc. don’t show this effect, and their audience scores align very well with the critics.

So the Tomatometer is actually a good gauge of whether or not the audience on Rotten Tomatoes will like a movie. And if you keep in mind that critics don’t like guilty pleasures or DCEU movies so much, you can usually trust the Tomatometer to agree with the majority of fans.

Ok, what about Metacritic? Metacritic works similarly. It has a Metascore, which is an aggregate score calculated by analyzing professional reviews, and it has a user score.

Metacritic is also different from Rotten Tomatoes in some regards; it’s not solely focused on movies. And the user base is much, much smaller. On Rotten Tomatoes you have several thousand to several hundred thousand votes per movie. On Metacritic you have a few dozen to maybe a few thousand user votes per movie. Will this make a difference? Yes!

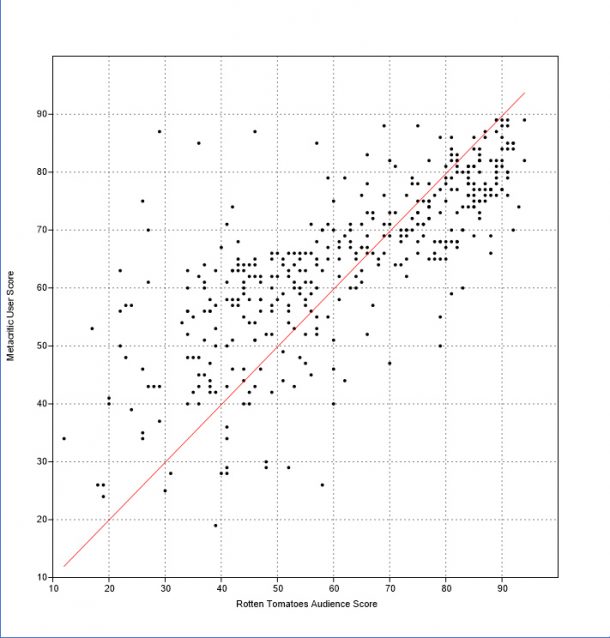

But let’s look at the correlation between the Metacritic User Score and Metascore:

Once again the black dots are movies. You can see that the overall trend is pretty similar, hence the strong correlation. Once more the movies in the red areas are the problematic ones, these are the movies where a good rating becomes a bad rating and vice versa. In the upper left corner are the movies with a high Metascore and much lower User Score and in the lower right corner are movies with a high User Score and a much lower Metascore.

So, what is going on here? Overall Metacritic has a good correlation, but if you compare the two plots for RT and Metacritic you can easily see that Metacritic has many more “problem” movies. Why is that?

- overall the Metascore by professional critics does reflect what the users on Metacritic feel.

- however there are significantly more outliers on Metacritic.

- 30.53% of all movies on Metacritic have the equivalent of a “fresh” user rating but a “rotten” Metascore. That is almost a third of all movies! Users upvote movies a lot on Metacritic.

- 2.8% of all movies in the sample have a “fresh” Metascore and a “rotten” User Score, so review bombing movies that are popular with critics happens very rarely. Among them are “The Last Jedi”, the 2016 “Ghostbusters” and “Spy”, but not that many more.

So Metacritic has some strange things going on for roughly 1/3 of the movies, where the users rate the movies significantly better than the critics. Compare that to the roughly 13%, or about 1/8, on Rotten Tomatoes.

The explanation is quite simple. Since Metacritic has so few users, some of the movies only have a couple of votes. You can see tampered reviews quite easily, or, to put it more kindly, where the aunt, mother, uncle, father and various studio execs might have voted for their movie…
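A quick bit of arithmetic shows why a small user base is so easy to move. The Python sketch below asks what happens to an average user score of 5.0 (on Metacritic’s 0 to 10 scale) when 20 extra votes of “10” come in, for different numbers of existing votes. The vote counts and the starting average are assumptions chosen for illustration, and `mean_after_extra_tens` is a hypothetical helper.

```python
def mean_after_extra_tens(n_votes, current_mean, extra_tens):
    """Mean user score (0-10 scale) after adding `extra_tens` votes of 10."""
    total = n_votes * current_mean + 10 * extra_tens
    return total / (n_votes + extra_tens)

# Assumed vote counts: a tiny, a mid-sized and a very large user base.
for n_votes in (24, 2_000, 200_000):
    new_mean = mean_after_extra_tens(n_votes, current_mean=5.0, extra_tens=20)
    print(f"{n_votes:>7} votes at 5.0 plus 20 tens -> {new_mean:.2f}")
```

With only two dozen votes, those 20 friendly ratings lift the average by more than two points, while with hundreds of thousands of votes they barely register.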

Among the movies with curiously high user scores and really low Metascores are “Speed Racer”, “Mr 3000”, “Dream House” or “Diary of a Mad Black Woman” (However, this movie is also an A+ movie on Cinemascore and is highly rated on RT as well by users).

Between Metacritic and Rotten Tomatoes there’s not so much overlap when it comes to upvoting or downvoting movies, other than DCEU movies or some of the guilty pleasures. And one movie that is disliked on both platforms is “The Last Jedi”. It’s an outlier on both websites.

Of note is that I found one rare occurrence of a movie with a diverse cast that has a bad user score but a good critics score: “Girls Night” has a curiously low User Score on Metacritic. It has a high Audience Score on Rotten Tomatoes and overall good scores. Maybe someone tried to downvote the movie on Metacritic, or it may very well be an outlier. That being said, Metacritic is much more susceptible to tampering because of its low number of user votes. And this effect seems to be at work for some movies, mostly some smaller movies, not so much the big titles.

What is the bottom line? Aggregate scores on RT and Metacritic are in good alignment with user scores. Due to Metacritic’s much smaller user base the site is more prone to outliers, and sometimes user scores seem to have been tampered with.

I will now show you some additional scatter plots to illustrate how well the various scores correlate.

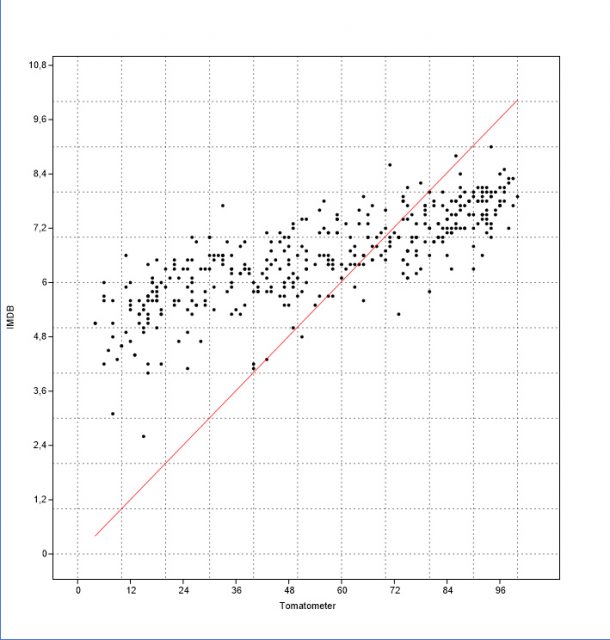

First up, the Tomatometer and IMDB:

IMDB is less extreme than the critics, but then again, the critics are pretty extreme on RT, which means they go very low and very high. Overall the trend is almost identical, though, meaning a low Tomatometer almost always corresponds to a low IMDB user rating.

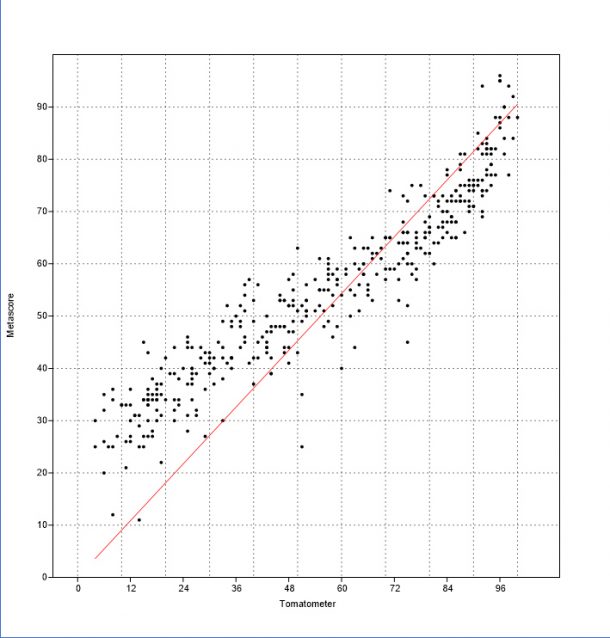

And now the Tomatometer and Metascore:

This is no surprise: the critics on Rotten Tomatoes and Metacritic agree almost perfectly! That is to be expected, since both sites draw on many of the same reviews. Some reviews differ, and Rotten Tomatoes uses more professional reviews to calculate its Tomatometer, but overall the shared sources make sure the correlation is almost perfect.

And now the Tomatometer and the Metacritic User Score

Correlation is good. What is curious here is that the correlation between the Tomatometer and the Metacritic User Score is ever so slightly better than the one between Metacritic’s own Metascore and User Score!

How are things when you compare both User Scores on Rotten Tomatoes and Metacritic?

While correlation overall is really strong between the two, and you can clearly see the very similar trend, the various strong outliers catch your eye immediately. Most of them are in the upper left quadrant, meaning a movie with a low Rotten Tomatoes Audience Score has a very high Metacritic User Score. Potential reasons have been discussed: Metacritic has movies with as few as two dozen user votes. And when the majority of them give a “10”, you know something is amiss! The takeaway is that the Rotten Tomatoes Audience Score is simply more reliable. And remember: it’s also the only user score in good alignment with Cinemascore.

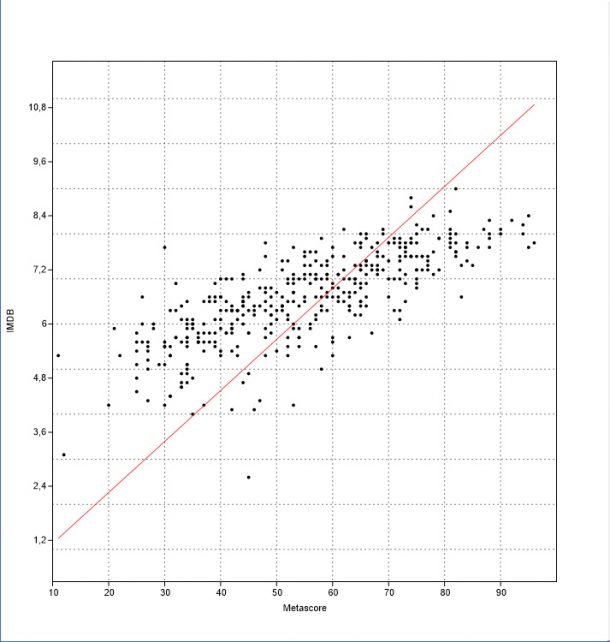

What about the Metacritic User Score and IMDB?

For the most part IMDB and the users on Metacritic agree on things. Only the Rotten Tomatoes Audience Score correlates ever so slightly better with IMDB, at 0.89. I showed you that plot in the previous article. You can also spot the various outliers, where movies with a good IMDB rating have a much lower Metacritic User Score and vice versa. This is a systematic problem the Metacritic User Score has for some of its movies.

And finally the Metascore and IMDB.

And to end things here’s a quick list of the correlation between Cinemascore and the various scores:

Correlation Cinemascore – Tomatometer: 0.5

Correlation Cinemascore – Metascore: 0.48

Correlation Cinemascore – Metacritic User Score: 0.46

Correlation Cinemascore – RT Audience Score: 0.72

Correlation Cinemascore – IMDB: 0.58

Correlation Cinemascore – Actual Score: 0.46

Correlation is moderate for all the scores but one, the Rotten Tomatoes Audience Score. Even after increasing the sample size from roughly 250 to almost 400 movies, that correlation has not weakened; if anything, it has become ever so slightly better.
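Since Cinemascore hands out letter grades rather than numbers, any correlation with a numeric score needs the grades converted first. One reasonable way, and only an assumption about how this could be done, is to map the grades to ordered numbers and use a rank correlation (Spearman), which only cares about the order of the grades and not the spacing between them. The Python sketch below shows the idea. The mapping `GRADE_VALUES`, the helper names and the sample data are all hypothetical.

```python
import math

# Assumed ordering of the letter grades; only the order matters for ranks.
GRADE_VALUES = {"A+": 13, "A": 12, "A-": 11, "B+": 10, "B": 9, "B-": 8,
                "C+": 7, "C": 6, "C-": 5, "D+": 4, "D": 3, "D-": 2, "F": 1}

def average_ranks(values):
    """Rank values from 1 to n; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs)
    sy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(sx * sy)

def spearman_rho(xs, ys):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson_r(average_ranks(xs), average_ranks(ys))

# Made-up grades and audience scores, for illustration only.
grades = ["A", "A-", "B+", "A", "B", "C+", "A+", "B-"]
audience = [88, 70, 66, 81, 59, 35, 92, 61]

rho = spearman_rho([GRADE_VALUES[g] for g in grades], audience)
print(f"Spearman rho = {rho:.2f}")
```

A plain Pearson correlation on the mapped numbers would also work, but it quietly treats the step from “B+” to “A-” as exactly as large as the step from “A” to “A+”, which is a stronger assumption.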

And now the bottom line. What can we take away from all this? And what can be said about The Last Jedi, once and for all?

- Tomatometer and Metascore are a very good representation of audience scores, with a few caveats.

- Critics don’t like Twilight, Fifty Shades, Pirates of the Caribbean, Transformers or DCEU movies. Audience scores tend to differ the most for these franchises and some other “guilty pleasures”, but the vast majority of scores are absolutely in alignment!

- Rotten Tomatoes has the MUCH more reliable User Score. Metacritic is susceptible to outliers or maybe even tampering because of its very low number of votes, only a few dozen sometimes, even the most popular movies only have a few thousand votes on Metacritic.

- Movies with a diverse cast or a primarily female cast are not bombed by trolls. They are all ok with the exception of “Girls Night” on Metacritic.

- The aggregate critics scores are much better than their reputation. While a single movie critic might be a shill or not, the overall score is a really good gauge of what the audience feels about these movies on these sites.

- Cinemascore is an outlier, it’s very different from IMDB, Rotten Tomatoes or Metacritic. Cinemascore is NOT so much about popularity but MUCH more about box office. Cinemascore is a great tool to gauge your chance of success at the box office. MUCH better than any of the other scores, no contest. So Cinemascore is the only useful score for the movie industry when it comes to knowing your chances of having a hit movie. The audience or critics score will never tell you that. A movie with a rating in the 40s can be a huge hit. But once you have a “B” Cinemascore you know it’s game over for you.

- Cinemascore can’t be used to argue that people liked or disliked a movie. It’s more about how the marketing worked. IMDB, Rotten Tomatoes and, with some restrictions, Metacritic are a much better gauge of what the general audience thinks of a movie. But even then, there will always be outliers! And remember: user scores and opinions have little effect on actual box office. People will go see the next Pirates, Fifty Shades or Transformers movie no matter what and complain afterwards.

And now one final word about “The Last Jedi”. While it is true that its score is low on both Rotten Tomatoes and Metacritic, it is much better on IMDB. The low user score on RT and Metacritic is an absolute outlier. It can’t be argued that only 47% or so of all people liked the movie. Some effect is at work here. Probably not bots or trolls; at least for RT this is highly unlikely, since the site otherwise has a very robust score that is in very good alignment with both the Tomatometer and Cinemascore. That is a clear indicator that Rotten Tomatoes is much better than its reputation.

My guess: once the media got wind that The Last Jedi had a curiously low score on Rotten Tomatoes, it created a feedback loop. People who would not normally vote felt motivated to vote to give their disappointment an outlet.

This is at least much more likely than Facebook trolls organizing a campaign or Russian bots sowing dissent.

And to end this very long article…. watch out for that “Solo” Cinemascore on May 25th! If it’s an “A” everything is fine and Solo could be a huge financial success. “A-” will mean Solo will probably not break even at the domestic box office (but global box office will see to it that it won’t be a flop).

I hope at least some of you enjoyed this lengthy article. At least we can now say that critics are not shills, that Rotten Tomatoes is actually quite a useful and accurate website, and that Metacritic has some issues due to its much lower number of users. IMDB is also a good tool to gauge the general opinion of users. There will always be outliers and anomalies, and as soon as a user score is much, much lower than either the Cinemascore or some aggregate critics score, something is amiss and you have to look for the reasons. DCEU movies have fans who want to prove to the world that the movies are not as bad as critics say. And perhaps Star Wars has fans who want to prove to the world that The Last Jedi is a disaster. In neither of these cases can it be said that a majority of fans actually feels that way. By my own estimates 80% of people like The Last Jedi and 20% dislike it. This can be gauged by looking at Cinemascore, the Tomatometer, Metacritic and IMDB.

And to answer the question asked in the headline: yes, you can trust movie critics, at least on aggregate when you look at the Tomatometer or Metascore. I named the few examples where opinions differ significantly. Fans of Bella and Edward, Christian Grey, huge transforming robots or male DC Superheroes (everyone loves Wonder Woman!) or movies with a religious theme may choose to ignore the critics.
