Cinemascore, Rotten Tomatoes And IMDB - An Investigation

Ever since The Last Jedi was released and its Rotten Tomatoes Audience Score kept dropping, it has been argued that Rotten Tomatoes is unreliable and prone to tampering, while services such as Cinemascore are highly scientific and much more accurate, since they are also used by the movie industry. This article investigates whether various claims about Rotten Tomatoes and Cinemascore are true.

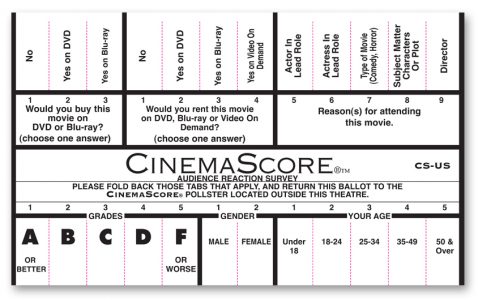

First, a quick primer on how Cinemascore works. Each Friday Cinemascore conducts surveys in a handful of cities across the USA and hands moviegoers the Cinemascore ballot pictured above. You simply fold the tabs you agree with, and the ballots are then analyzed by the Cinemascore team. The exact methods and statistics they use are unknown, but in the end Cinemascore posts a score, anywhere between A+ and F. A movie with a Cinemascore of B or so is already a disappointment. Anything below B and the movie has real problems.

Cinemascore gauges several things. Did the trailers mislead people? Did the marketing for the movie get the right people to buy a ticket? People who thought the movie would be something else entirely tend to give low scores.

Cinemascore is more about whether the marketing was right, and especially about expected box office, than about actually grading the movie, which is why Cinemascore rarely hands out really bad scores. As and Bs are abundant, Cs are less common, and a D or even an F is a rare occurrence. This is why high Cinemascores for questionable movies sometimes confuse people.

Associated with each Cinemascore is a “box office multiple”. This is a factor which is used to predict final box office and the legs a movie will have. Will it drop quickly or will it be strong in the coming weeks? In 2014 Cinemascore disclosed these box office multiples to Deadline.

Using this table and the freely available Cinemascore database, which gives the score for any movie Cinemascore has surveyed, I gathered some data.

Here is what I did: I gathered the Cinemascores, Rotten Tomatoes Audience Scores, IMDB User Scores, final domestic box office and the actual multiple (i.e. final domestic box office divided by opening weekend box office) for 249 movies. I selected some top movies, some from the middle and some from the very bottom of the yearly Box Office Mojo box office charts, to make sure I got a good variety of successful movies, movies that did ok and movies that had little success. I did this for movies released between 2004 and 2014 and added only three or four more recent movies, among them the new Star Wars movies.
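
The actual multiple described above is simple arithmetic. Here is a minimal sketch in Python. The function name `actual_multiple` and the dollar figures are made up purely for illustration and are not taken from my data set.

```python
def actual_multiple(final_domestic: float, opening_weekend: float) -> float:
    """Final domestic box office divided by opening weekend box office."""
    if opening_weekend <= 0:
        raise ValueError("opening weekend gross must be positive")
    return final_domestic / opening_weekend


# Hypothetical movie: $50M opening weekend, $160M final domestic total.
print(round(actual_multiple(160_000_000, 50_000_000), 2))  # 3.2
```

A low multiple means the movie was frontloaded, while a high multiple means it had legs.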

After gathering all the data I conducted several statistical analyses. I am afraid it will get somewhat technical here, but put very simply, I investigated the following:

- does Cinemascore predict final box office?

- does Rotten Tomatoes predict final box office?

- does IMDB predict final box office?

- does the Cinemascore Box Office Multiple actually reflect reality?

I freely admit that the following graphs and numbers might be a bit technical. Feel free to skip this section and head right on to the conclusions if you don’t want to look at various scatter graphs.

To analyze the data I used the freeware Past, which is mostly used in science for quick and easy data analysis. At the heart of everything is what is called “correlation”: are two variables somehow connected? If they are, the correlation is high (a “1” would mean perfect correlation). If not, the correlation is low (a “0” would mean there is no correlation at all). There are formulas for all kinds of correlation coefficients. Because the scores are ranks, this case calls for rank statistics, so I used the Spearman correlation coefficient. Also calculated is a p-value, which is a gauge of significance: the lower, the better. Testing for significance tells you whether the results you get could merely be coincidence. I will not post the values here, but all the correlation coefficients are significant, meaning they are not just coincidental.
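
For anyone who wants to reproduce this kind of number without Past, here is a rough sketch of the Spearman coefficient in plain Python. It is not what Past does internally (I don't know its implementation). It simply ranks both variables, gives tied values their average rank, and computes the ordinary Pearson correlation of the ranks. The function names and the example numbers are invented for illustration, and the p-value is left out.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rho: Pearson correlation of the rank-transformed data."""
    return pearson(average_ranks(x), average_ranks(y))


# Invented example: five movies, a score and a box office figure each.
scores = [1, 2, 3, 4, 5]
gross = [10, 20, 15, 40, 100]
print(round(spearman(scores, gross), 2))  # 0.9
```

Because only the ranks matter, it makes no difference whether the box office is given in dollars or in millions of dollars.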

Finally, I ran a simple linear regression on the data and plotted the variables in a scatter graph. This gives a quick visual impression of whether two variables are correlated.
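
The red line in the plots is such a linear fit. As a sketch of the idea (again, not Past's own code), an ordinary least squares fit can be written in a few lines of Python. The helper name `linear_fit` and the sample points are assumptions for illustration only.

```python
def linear_fit(x, y):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept


# Made-up points lying exactly on the line y = 2x + 1.
slope, intercept = linear_fit([0, 1, 2, 3], [1, 3, 5, 7])
print(slope, intercept)  # 2.0 1.0
```

With real movie data the points will not sit on the line, and the amount of scatter around it is what the plots are meant to show.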

With these methods I tested if Cinemascore is correlated with final box office. Does Cinemascore actually predict final box office within reason?

This is how you read this plot: the red line represents the linear fit. In a perfect world, if Cinemascores and final box office were highly correlated, the black dots (each dot represents one movie) would cluster around the red line.

As you can see they do not.

I converted the Cinemascores to a numerical value. A+ is 15, F is 0. This circumvents the problem that several Cinemascores have identical box office multiples.
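
A conversion like this can be sketched as a simple lookup. Note the assumptions: the sketch below uses the thirteen usual letter grades and numbers them 0 to 12, which is not the exact 0 to 15 scale I used, and the names `GRADES` and `grade_to_number` are invented here. For a rank-based measure like Spearman this does not matter, since only the order of the grades counts.

```python
# Grades from worst to best; the position in the list is the numeric value.
GRADES = ["F", "D-", "D", "D+", "C-", "C", "C+", "B-", "B", "B+", "A-", "A", "A+"]
GRADE_TO_NUMBER = {grade: number for number, grade in enumerate(GRADES)}


def grade_to_number(grade: str) -> int:
    """Map a letter grade such as 'B+' to its position on the ordinal scale."""
    return GRADE_TO_NUMBER[grade.strip().upper()]


print(grade_to_number("F"), grade_to_number("B+"), grade_to_number("A+"))  # 0 9 12
```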

The correlation between Cinemascores and final box office is 0.56. That is only moderate and actually not good at all. Anything below 0.5 is usually bad news, and ideally you want a correlation much higher than that. As a reminder, 1 means “perfect” and 0 means no correlation at all, so 0.56 is somewhat underwhelming. The average box office per grade in my sample does not come close to the averages given by Cinemascore either: my averages are much, much higher (due to outliers), and Cinemascore A+ movies actually make less money than A movies, at least in the years 2004 to 2014.

So, Cinemascores are a bad predictor of final box office. You can easily see in the graph that an “A” movie (14) can have box office results ranging from a few million up to almost a billion.

Then I looked at how the actual box office multiples predict final box office. The actual multiples were calculated from the data on Box Office Mojo, where both the final numbers and the opening weekend numbers are freely available.

It’s all over the place. Even the actual box office opening multiples don’t really predict final box office. Correlation is 0.51, which is also moderate, bordering on low. It’s more or less identical to Cinemascore’s prediction, only slightly worse.

What does that all mean? It means successful movies, at least from 2004-2014, are usually much more frontloaded and have higher drop offs, thus a very successful movie can end up with a box office multiple that, using Cinemascore’s list, would be bad news. It also mirrors the current trend that movies only have a short life in cinemas.

Next, I looked at IMDB user scores and their correlation to final box office.

IMDB is also all over the place. Correlation is 0.53, which is more or less on par with Cinemascore.

And finally, how does Rotten Tomatoes fare?

You can see a trend, but Rotten Tomatoes user scores are also not very good at actually predicting final box office. However, the correlation is 0.57, which is virtually identical to Cinemascore’s 0.56. In other words, Rotten Tomatoes predicts final box office just as well, or as badly, as Cinemascore.

Summary: predicting final box office based on user scores or predicted box office multiples is quite inaccurate. Correlation is only moderate, and in the end Cinemascore, Rotten Tomatoes, IMDB and even the actual box office multiples all have the same very moderate accuracy. But it should be stressed that the much maligned Rotten Tomatoes, despite all claims of tampering and trolling, works just as well as Cinemascore.

So let’s look at how the Cinemascores correlate with the actual box office multiples. Since we already know that Cinemascore is not really good at predicting box office the following graph comes as no surprise.

There is somewhat of a trend, but the correlation is actually low. At only 0.48, this is not a good result. You can see how the actual multiples differ significantly from what the Friday night Cinemascore survey would suggest.

So, once more we see that Cinemascore does not do a very good job of actually predicting the box office. Your movie with a score of A+ (4.8 multiple, grade 14) could very well end up having a multiple of only 2.3. The majority of Cinemascore A+ movies do worse at the box office than predicted, as indicated by the number of black dots below the red line. Most Cinemascore grades have this problem when you look at how the movies in each category actually performed.

And then I wanted to know how well the various scores correlate with each other. Is there any correlation between Cinemascores (which are calculated from moviegoer feedback) and other movie user scores?

First up is Cinemascore and IMDB.

There is a trend but there doesn’t seem to be much of a correlation between the two. Correlation is only a very moderate 0.53.

How is it with Cinemascore and Rotten Tomatoes Audience Scores?

Things look quite scattered, but a trend is very clearly visible, much better so than with IMDB. And it may surprise some, but correlation is 0.7. Which is good. Not very good. But good. The Cinemascore scores and Rotten Tomatoes Audience Scores are a much better fit than Cinemascore and IMDB.

And now a look at Rotten Tomatoes and IMDB.

Now, this looks nice. Actually, correlation is high, it’s 0.87 which is very good! You can easily see how the graph shows much less scattering.

A few interesting things can be seen here. Users on Rotten Tomatoes tend to give lower scores to bad movies, whereas IMDB has higher user scores for bad movies. And the reverse is true for popular movies. Rotten Tomatoes tends to give higher audience scores for the good movies than IMDB. Which is an effect that probably many have seen at work or at least felt, IMDB always seems to be less extreme than Rotten Tomatoes. But still, both sites show very similar user behavior.

Next up I analyzed each and every Cinemascore individually. It would be too much to post the results in full detail but a few things can be seen:

- Cinemascore A+ movies make less money on average than A movies.

- For Rotten Tomatoes, by contrast, movies with higher audience scores always make more money on average.

- Of the 25 A+ Cinemascore movies only around 5 or so have a similar score on Rotten Tomatoes and only 10 of these A+ movies actually have the predicted box office multiple, the others are lower, sometimes even much lower. In other words, instead of making 4.8 times the money made on opening weekend, they make much less.

- For any given Cinemascore the empirical standard deviation (error) for the average actual box office multiple is always quite high. It simply means that very few movies actually make the box office predicted by Cinemascore and box office fluctuates wildly across all scores.

- Quite a few successful movies end up with lower than expected actual box office multiples because they are heavily frontloaded. In other words: people flock to the cinema on the opening weekend for all those highly anticipated blockbusters. And since the opening weekend has such a strong box office, it’s difficult not to experience a severe drop off. All the Harry Potter movies are victims of this phenomenon, for example. Deathly Hallows Part 1 has an actual box office multiple that would correspond to a Cinemascore of C+ (a failure), but with almost 300 million domestic it was a success. Its actual Cinemascore was “A”, so judged by the multiple alone one would say the movie seriously underperformed.
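
The per-grade averages and standard deviations mentioned in the list above can be computed along these lines. This is only a sketch using Python's standard library. The function name `multiples_by_grade` and the sample values are invented and are not my actual data.

```python
from collections import defaultdict
from statistics import mean, stdev


def multiples_by_grade(movies):
    """Group (grade, actual_multiple) pairs and summarise each grade."""
    groups = defaultdict(list)
    for grade, multiple in movies:
        groups[grade].append(multiple)
    summary = {}
    for grade, values in groups.items():
        # The sample standard deviation needs at least two values.
        spread = stdev(values) if len(values) > 1 else None
        summary[grade] = (mean(values), spread)
    return summary


# Invented sample: three "A" movies and one "B" movie.
sample = [("A", 2.0), ("A", 3.0), ("A", 4.0), ("B", 2.5)]
for grade, (average, spread) in multiples_by_grade(sample).items():
    print(grade, average, spread)
```

A large spread relative to the average is what "box office fluctuates wildly" means in numbers.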

So, what does this all mean?

When it comes to predicting final box office, Cinemascore doesn’t give really accurate predictions. Your A+ movie with a box office multiple of 4.8 might end up with an actual multiple of 2.31, which means most people went to see your movie on the opening weekend and that after that the movie more or less quickly collapsed at the box office.

Also, both Rotten Tomatoes and IMDB are easily as good as Cinemascore at predicting final box office based on user scores. There are a few caveats, however: Cinemascore has its score on Friday night, whereas you should probably wait until Sunday night or so for IMDB and Rotten Tomatoes to have somewhat accurate scores. And IMDB and RT scores can still change considerably after the first weekend.

But it can be said with some confidence that the final user scores on Rotten Tomatoes and IMDB are as good or reliable as the Cinemascore. They are all not very good at predicting final box office.

Also, the much maligned Rotten Tomatoes Audience Score shows a good correlation with Cinemascore. Which may surprise some since the one is said to be scientific and the other not. But the correlation does exist and can’t be argued away. It seems the trolls and other basement dwelling fanboys said to manipulate the Rotten Tomatoes scores either do a very bad job, on average, or are only very few in numbers.

The takeaway is that Rotten Tomatoes and IMDB are both as good or bad as Cinemascore when it comes to the user scores themselves and box office. Of course, Cinemascore also provides movie studios with a demographic breakdown, which is quite important, and it is a clear indicator of whether the marketing of the movie was successful and the trailers were accurate and not misleading. So the service absolutely has its uses, but it would be wrong to put too much confidence in a Cinemascore and say “See, the movie has an ‘A’ Cinemascore! Audiences love it!” Any other user score is just as accurate, at least in terms of box office success, on average; there will always be a few outliers.

Also, as said in the introduction, Cinemascore is not really about popularity, and more about a meeting of expectations.

Finally, The Last Jedi had a Cinemascore of A. But the actual box office multiple was 3.23 instead of 3.6. A 3.23 corresponds to a B+. Still not bad, but already considered somewhat of a disappointment. You could say it’s another sign the movie underperformed. The core audience on Friday night might have given the movie an A, but in the following weeks the movie didn’t have the legs it was predicted to have.

However: on average the Cinemascore “A” movies in my sample have an actual box office multiple of 3.18 (B+), but the standard deviation of 0.84 means the average score is more or less meaningless, since roughly 68% of all Cinemascore “A” movies will be between a 2.34 (a C-) and a 4.02 (an A). Your “A” movie could turn out to be pretty much anything. The Last Jedi’s actual box office multiple is absolutely normal and very close to the median multiple for A movies.
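
The arithmetic behind that range is just the average plus and minus one standard deviation. A quick sketch in Python, using the numbers quoted above (the helper name `one_sigma_band` is made up, and the 68% figure assumes the multiples are roughly normally distributed):

```python
def one_sigma_band(mean_value: float, std_dev: float) -> tuple[float, float]:
    """Return the interval from one standard deviation below to one above the mean."""
    return mean_value - std_dev, mean_value + std_dev


# Average multiple and standard deviation for "A" movies, as quoted above.
low, high = one_sigma_band(3.18, 0.84)
print(round(low, 2), round(high, 2))  # 2.34 4.02

# How far is The Last Jedi's 3.23 from the average, in standard deviations?
z = (3.23 - 3.18) / 0.84
print(round(z, 2))  # 0.06
```

A distance of about 0.06 standard deviations is as unremarkable as it gets.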

Well, I hope at least one or two people are still with me. I know this was a bit much, but I hope you can take away a few things from this very long article. User scores, no matter where they come from, are not really meaningful when predicting box office. And Rotten Tomatoes is as good at it as Cinemascore. The two are even correlated, which shows that the Rotten Tomatoes Audience Score is much better than its reputation, if you consider Cinemascore a highly scientific score.

Anyone interested in the raw data (an Excel sheet with 249 movies), please say so in the comments. I might provide the raw data later via Dropbox or so.

Sources:

Cinemascores

How Cinemascore works, article on Deadline

Box Office Results on Box Office Mojo

IMDB

Rotten Tomatoes
